Metadata Driven Pipelines (Full Load): The Fabric Modern Data Platform

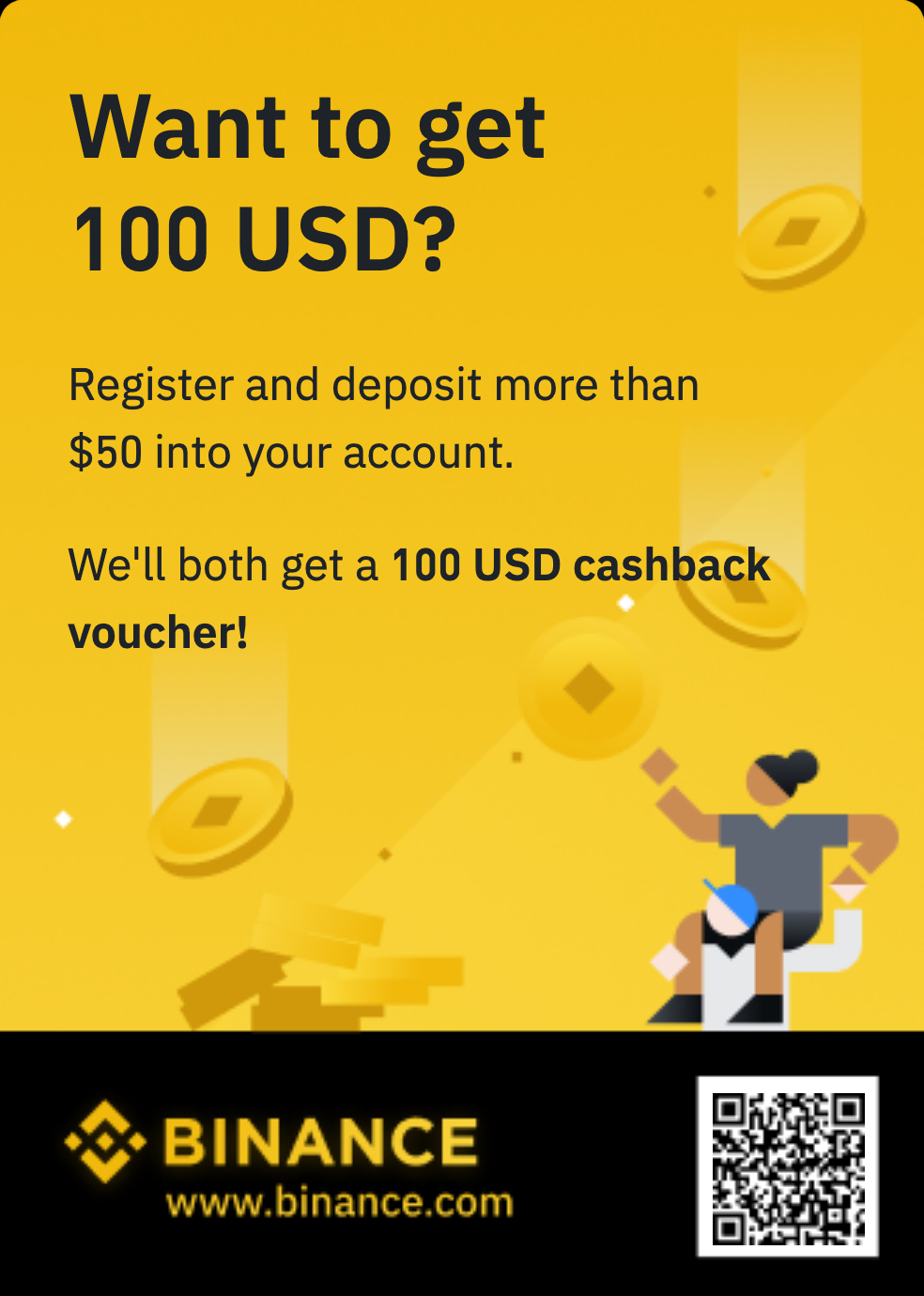

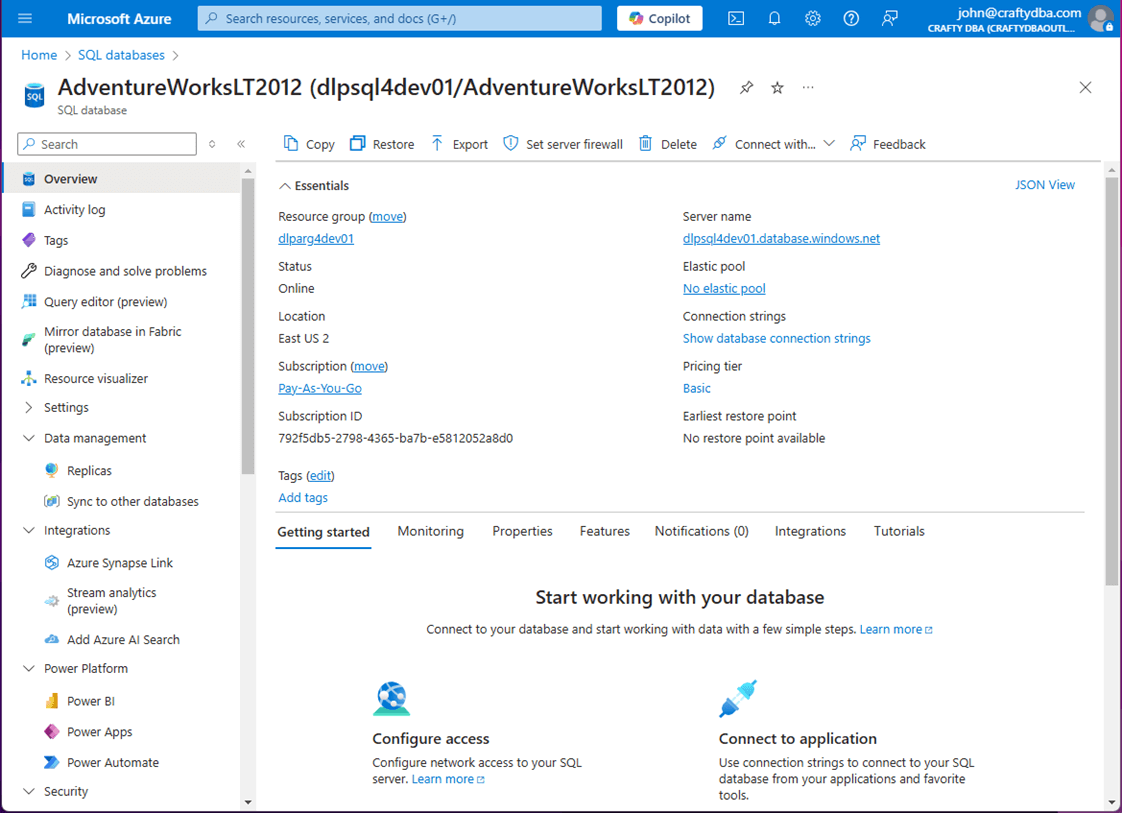

To solve a complex business problem, one needs to break the process into smaller solvable steps. Today, we are going revisit the Modern Data Platform for Fabric Warehouse. Our first problem to solve is a full data load pattern from a relational database management system (RDBMS) into the Fabric Warehouse. The solution creates the framework that will be used in the next few articles. How can we use data pipelines to land full copies of data into raw zone tables?Business ProblemOur manager has asked us to create data pipelines to copy tables from the Adventure Works LT database located in Azure SQL into a Warehouse in Microsoft Fabric. Last time, we used Generative AI to create a T-SQL notebook that populated a raw schema with target tables. The focus of today’s article is to show how a metadata design can reduce the amount of code needed to accomplish such tasks.Overall DesignIn this section, we will talk about the source and target systems as well as the code for Extracting and Loading data. The source system is an Azure SQL Database. The image below shows the Adventure Works LT 2012 database that is using basic Database Transaction Units. Please read this Microsoft article for details on the Basic, Standard and Premium tiers.The target system is a Fabric Data Warehouse named “dw_advwrks_analytics.” The image below shows the objects which are in the “ws-dev-advwrks-analytics” workspace.The table below has a description of each object.nb-advwrks-2012-ltT-SQL NotebookNotebook created by Generative AI script.nb-make-meta-dataT-SQL NotebookThe metadata tables are used as program inputs.nb-make-refined-layerT-SQL NotebookDetails revealed in future article.pl_full_load_stepData PipelineFull table copy from source to destination.pl_exec_job_stepsData PipelineFor given job, start and stop steps, execute the full load data pipeline.pl_sched_daily_loadData PipelineCall to execute job steps by job id.The last piece of the puzzle are the schemas used to store our work. The image below gives you a glimpse of the dynamic code that will be used to CTAS tables in the staging area and create views in the refined quality zone. These details will be covered in the fourth article.The above image shows the schemas used in the Fabric Warehouse. The table below has a description of each schema.Schema NameDescriptionmetaThe metadata tables are used to control the data pipelines.rawA complete copy of the data.stage0Contains the most recent incremental data.stage1Copy of raw table at point in time x.stage2Copy of raw table at point in time y.refinedViews that point to a given stage.tmpBusiness logic and data aggregation.The diagram below shows the final design at the end of this series of articles. Today, we are focusing on the full load pipelines using a metadata driven design pattern.The above design uses staging tables so that we can rebuild the Fabric Warehouse without any impact on the current views and current stage tables. This reduces the amount of downtime experienced by the end users. Once the warehouse is ready, a quick update of the views switches the users from one stage to the next.Manage ConnectionsIn Azure Data Factory, our first task was to setup linked services to the source and destination data. In Microsoft Fabric, this task has been moved to the manage connections and gateways section of the service. The image below shows a connection called “con4advwrks4paas” has been created using basic security, user name and account password. Connecting to cloud data sources within Fabric is quite easy.Some functionality has been lost with this change. For instance, in Azure Data Factory we could parameterize the linked services so that multiple database can be access with one connection. In Fabric, this functionality has been moved into the copy activity. Other options such as trust server certificate are missing from the dialog box. We will talk about how this impacts our data gateway setup in a future article. The image above shows connections to storage containers in all three cloud vendors (Azure, GCP, and AWS).Static Full Load PipelineThis is not the first time I have written about metadata driven pipelines. Please see this prior article on this design pattern with Fabric Lakehouse. Today, I am going to focus on what is different. While the image below shows a single copy activity, we have three different logging options. First, we log the start of the pipeline. After the copy activity, we either log the success of the action with a record count or log the failure of the action with an error message.The above image shows the activity named “act_gen_script_log_start.” Please note, it is important to set the timeout. I did not do this change on all activities. So, it is up to the reader to perform this action themselves. The image below shows the script activity performing an insert. Yes, I am referring to a pipeline parameter in this example. But if you want to hard code each and every action, replace the parameter with static text.The copy activity is the work horse of data pipelines.The above image shows the general properties of copy activity. Please note the default time out is 12 hours. The image depicted below shows the settings for the source data. Both the schema name and table name are hard coded.Any object that exists in Microsoft Fabric automatically shows up as a connection. Use the drop-down menu to find our target warehouse. Again, the destination schema and table name are hard coded in the activity.By default, staging in Microsoft Fabric is enabled. In the first step, the source data is copied and stored as a temporary parquet file in staging. This file is ingested into the data warehouse table in the second step. Please see Microsoft documentation for more information about the copy activity in Fabric.It is especially important to capture lineage if possible when creating pipelines. In this framework, the total number of copied records are stored in the Log table upon successful execution.Equally important is to capture error messages for debugging and re-running full load steps. The image below depicts storing of the error message in the Log table.Since logging is a critical component of data pipelines, enclosed is a cell from the T-SQL notebook that creates the meta table.-- -- Delete existing table -- DROP TABLE IF EXISTS [meta].[Logs]; GO -- -- Create new table -- CREATE TABLE [meta].[Logs] ( [job_id] [int] NULL, [step_id] [int] NULL, [program_name] [varchar] (128) NULL, [step_description] [varchar] (128) NULL, [action_type] [varchar](128) NULL, [additional_info] [varchar](4000) NULL, [action_timestamp] [datetime2](6) NULL ); GO If we kick off the “pl_full_load_step” data pipeline, we can see it was successful.It is always important to test our code. The image below shows the parent pipeline “pl_exec_job_steps” calling the child pipeline we just created “pl_full_load_step.” This screen shot was taken from a later point in time in which I changed the name to “pl_data_load_step” to accommodate both full and incremental load patterns.To recap, we worked on a full load data pipeline for mostly static entries. This is only useful when you have a handful of tables. If we had five hundred source tables to ingest into the warehouse, we would end up with five hundred data pipelines. It would be a maintenance nightmare if we wanted to change the logging message. In the next section, we will talk about how metadata driven designs are the way to go.Dynamic Full Load PipelineThe image below shows the metadata that I came up with for the Adventure Works LT 2012 database. Basically, we can copy this JSON document as text and paste it in as the default value for the pipeline parameter.Since jobs and steps are critical components of data pipelines, enclosed is a cell from the T-SQL notebook that creates the meta table. Jobs group a bunch of steps together. For instance, we might want to have one job bring over all the [dbo] tables while another job bring over all the [SalesLT] tables. Thus, we have job 1 (dbo) with two steps and job 2 (SalesLT) with ten steps.-- -- Delete existing table -- DROP TABLE IF EXISTS [meta].[Jobs]; GO -- -- Create new table -- CREATE TABLE [meta].[Jobs] ( [row_id] [int] NOT NULL, [job_id] [int] NOT NULL, [step_id] [int] NOT NULL, [step_description] [varchar] (128) NULL, [load_type] [varchar](128) NULL, [src_type] [varchar](128) NULL, [src_database] [varchar](128) NULL, [src_schema] [varchar](128) NULL, [src_table] [varchar](128) NULL, [src_query] [varchar](8000) NULL, [dst_type] [varchar](128) NULL, [dst_database] [varchar](128) NULL, [dst_schema] [varchar](128) NULL, [dst_table] [varchar](128) NULL, [is_enabled] [bit] NULL ); GO Given the job design, the [SalesLT].[Address] table is step 1 of job 2.-- -- Job 2, Step 1 -- -- del row DELETE FROM [meta].[Jobs] WHERE [row_id] = 3; GO -- add row INSERT INTO [meta].[Jobs] ( [row_id], [job_id], [step_id], [step_description], [load_type], [src_type], [src_database], [src_schema], [src_table], [src_query], [dst_type], [dst_database], [dst_schema], [dst_table], [is_enabled] ) VALUES ( 3, 2, 1, 'Daily full load for table SalesLT.Address', 'full', 'IaaS - SQL Server 2022', 'AdventureWorksLT2012', 'SalesLT', 'Address', 'select * from SalesLT.Address', 'PaaS - Fabric Data Warehouse', 'dw_advwrks_analytics', 'raw', 'Address', 1 ); GO The completed notebook and code is enclosed as a zip file at the end of the article. The image below exhibits the default parameter for the Address table.We need to go back and change the hard coded source schema and source table to dynamic parameter values.Since this is a full load pattern, we need to truncate the source table before loading it. We can use the expression language of the data pipeline to concatenate both static text and dynamic parameters to produce the correct T-SQL syntax supported by Fabric Warehouse.If we re-execute the “pl_full_load_step” pipeline, we get the same results in the log table. Instead of a static program, we can have a dynamic program that is based on input parameter that is a JSON document.Dynamically Execute the Job PipelineThe “pl_exec_job_steps” parent pipeline is how the developer can execute a series of child pipelines, “pl_full_load_step,” for tables in the source system. The design of this data pipeline allows for flexible scheduling. The job parameter should always reflect the group of steps you want to execute. If the alpha and omega parameters are zero, the complete set of steps are executed in sequential fashion. What happens if we want to break the job into two pieces and execute them in parallel? Just create a scheduling data pipeline and call the parent pipeline two times with the correct parameters: {2, 1, 5} and {2, 6, 10}.The if activity is used to set a variable named “sql_stmt.” If the alpha and omega parameters are used, we need to add them to the resulting SELECT statement. Also, we only want steps that are enabled. This allows us to disable unused or one time used steps. If the parameters are not used, the two equality statements can be removed from the final T-SQL statement. The for each activity keeps track of the number of failed pipelines and logs the final result using an if condition activity. A future enhance would be the addition of a fail activity if you want it to show up in the monitor logs.Let us evaluate the two jobs that we have defined. The image below reveals job 1 which contains the child pipeline executions for the two tables in the [dbo] schema.The image below indicates the successful execution of job 2 that fully copies the ten tables in the [SalesLt] schema by calling the child data pipeline.In a nutshell, the meta data driven design has reduced the amount of code needed to move data from the source to the destination tables. If we hard coded the data pipelines, we would have need one for each table. Thus, a total of 12 pipelines. Instead, the parent and child data pipeline results in two artifacts in our code repository.Table DetailsThere are some utility functions in the meta schema. The view named “uvwUserTableInfo” shows the definition of a given table.We can look at the data in the raw schema for the Address table using a remarkably simple select statement.Let us complete this section with two callouts to the product group at Microsoft that works on the Fabric Warehouse. First, the OBJECT_ID() function is part of all services that execute T-SQL except for Fabric Warehouse. Please up tick this suggestion since it reduces the need to look up the object id.The OBJECTPROPERTYEX() function is the quickest way to get record counts from SQL Server. This function is supported by Fabric Warehouse but does not report the correct record counts. The above image shows the incorrect totals. Please up tick this suggestion since it reduces the need to execute a SELECT statement for each table.Of course, if we ask how many steps we have in our job table using a SELECT statement, the correct result of twelve is returned.SummaryToday, we revisited the Adventure Works LT 2012 schema that resides in an Azure SQL database. The first attempt at the full load data pipeline into Fabric Warehouse used a hard coded approach. This results in redundant code. If we have N tables we must write N data pipelines. This can be a maintenance nightmare if we must change the activity setting in all N pipelines. The second attempt used a pipeline parameter. Since the parameter is a JSON document, multiple data points can be transmitted at one time to the child pipeline.The next step in a metadata driven design is to create a job step executor pipeline. I have found out over time and with experience; clients always want to change the job schedule. Having the flexibility to break the job into pieces that can be scheduled in parallel is important. This allows the data to load into the warehouse faster at the expense of using more computing resources.Finally, the quality assurance team will start off by comparing the record counts from the source and target systems. Next, they will compare partial data sets to make sure the unexpected column transformations do not occur. The quickest way to get record counts is to use metadata functions that are available in T-SQL. However, one key function is missing, and one function does not return the correct results. Please up-tick these Fabric ideas so that the product team fixes this missing functionality.The enclosed zip file contains all the code from this article. The T-SQL notebooks can be imported directly into a Fabric Workspace. As for the data pipelines, create a new blank pipeline with the same name. Edit the JSON behind the pipeline and replace it with the supplied JSON containing the completed pipeline. Of course, the connections will have to be manually changed to reflect your use case.Next time, I will be talking about how to deploy and configure the data gateway for on-premises SQL Server or in-cloud SQL Server instances without a public endpoint.